Jan 21, 2026 | 3 min read

Cade Whitborn, Head of Strategy and Customer Success

The AEO Data Moment Has Arrived - Sort Of

For months, digital marketers have been watching the explosion of user activity in ChatGPT, Perplexity, Claude, and other LLM applications while lacking any clear way to measure how their digital properties perform in this new landscape. Traditional SEO tools could tell you everything about Google rankings, but nothing about whether Claude was recommending your services or ChatGPT was citing your content.

That’s now changing. A new category of Answer Engine Optimisation (AEO) tools is emerging, promising to bring the same rigour to LLM visibility that we’ve long had for traditional search. But as with any nascent discipline, the metrics are immature, the methodologies vary wildly, and the gap between what these tools measure and what actually matters is worth examining closely.

This post examines two tools I have been using in this space: Sitecore’s AEO/SEO Researcher agent (part of SitecoreAI) and SEMrush’s AI Visibility Toolkit. I tested both using our own website, codehousegroup.com, as the subject. Both tools aim to help you benchmark, track, and improve your AI optimisation performance - but they take different approaches that reveal something important about where this discipline currently stands.

Two Philosophies of AEO Measurement

Before diving into features, it’s worth understanding the conceptual difference between these tools:

Sitecore’s agent measures citation readiness - how prepared your website content is to be cited by LLMs. This is predictive. It audits your site against factors believed to influence whether AI systems will surface your content.

SEMrush’s AI Visibility Toolkit measures visibility - how often your brand actually appears in AI-generated answers. This is descriptive. It tracks real mentions across LLM platforms.

These are complementary but distinct questions. You can score highly on readiness while scoring poorly on actual visibility - and that gap is where things get interesting.

Sitecore AEO/SEO Researcher: The Audit Approach

Sitecore’s AEO/SEO Researcher is one of 20 built-in agents included with a SitecoreAI subscription. According to Sitecore, it “helps you discover high-intent keywords, semantic clusters, and answer engine optimization opportunities to improve your content’s search visibility and AI citation readiness.”

What It Does

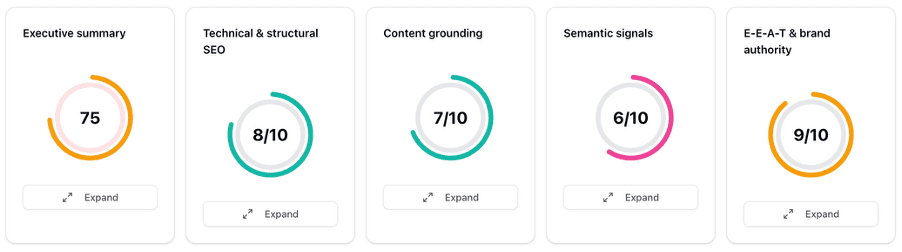

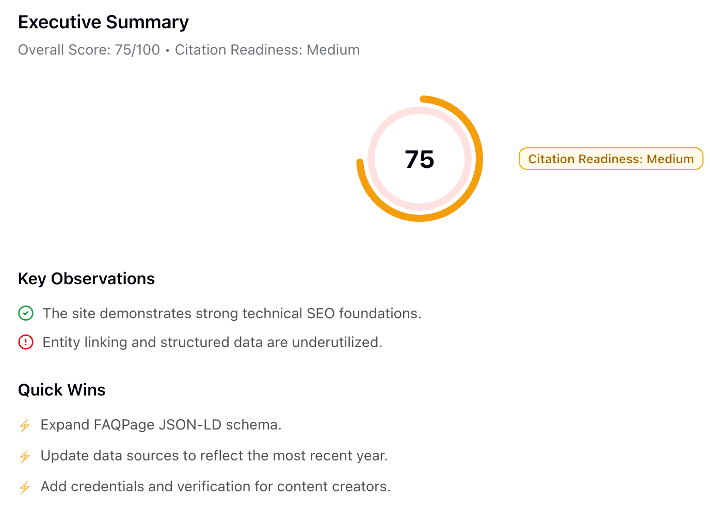

You provide a URL (and optionally keywords or a topic focus), and the agent generates a report with an overall ‘Citation readiness’ score out of 100. For codehousegroup.com, the tool returned a score of 75, classified as ‘medium’ citation readiness.

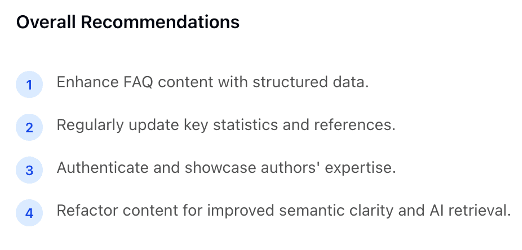

The report covers four dimensions (technical, content structure, semantic clarity, and UX signals) and provides recommendations for improvement.

What I Found

To test whether the tool was genuinely analysing site-specific characteristics, I ran audits on three very different domains: our agency site (codehousegroup.com), Sitecore’s own corporate site (sitecore.com), and SEMrush’s site (semrush.com). These represent distinct content profiles, audiences, and purposes - a digital experience agency, an enterprise software company, and a marketing technology platform.

The results raised questions. All three sites received near-identical top-level scores. While the detailed commentary showed some variation - different specific observations and recommendations - the overall metrics were remarkably similar across these distinctly different websites.

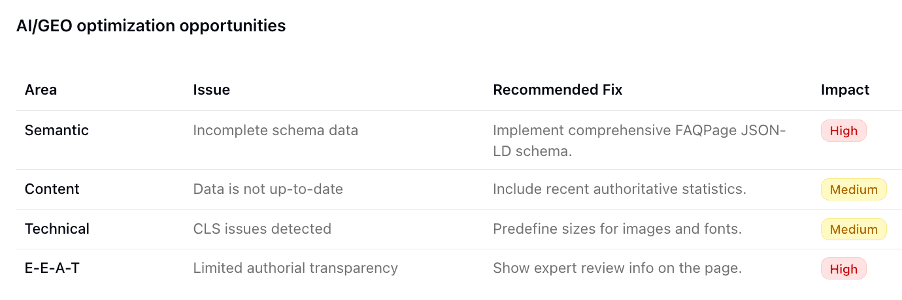

This suggests the tool may be pattern-matching against generic heuristics rather than performing nuanced, site-specific analysis. The recommendations reinforce this impression: they gravitate toward common themes such as adding structured data through additional schemas and updating dated references.
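For readers unfamiliar with what “adding structured data through additional schemas” typically involves, here is a minimal sketch that generates a schema.org Organization block in JSON-LD, one common form of structured data. It is an illustrative assumption, not the Sitecore agent’s actual output or recommendation; the helper name `organization_jsonld` and the profile URL are hypothetical placeholders.

```python
import json


def organization_jsonld(name: str, url: str, same_as: list[str]) -> str:
    """Return a schema.org Organization object wrapped in a JSON-LD script tag.

    Hypothetical helper for illustration only.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # sameAs links point to other authoritative profiles of the same entity.
        "sameAs": same_as,
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


if __name__ == "__main__":
    print(organization_jsonld(
        "Codehouse",
        "https://codehousegroup.com",
        ["https://www.example.com/codehouse-profile"],  # hypothetical placeholder URL
    ))
```

The resulting tag would sit in a page’s HTML; which schema types actually influence LLM citation remains an open question, as the rest of this post discusses.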

The Actionability Question

Some recommendations feel too abstract to act on. “Refactor content for improved semantic clarity and AI retrieval” sounds sensible, but what does it mean in practice? Similarly, “content lacks entity-based grounding links” requires further research to understand, let alone implement.

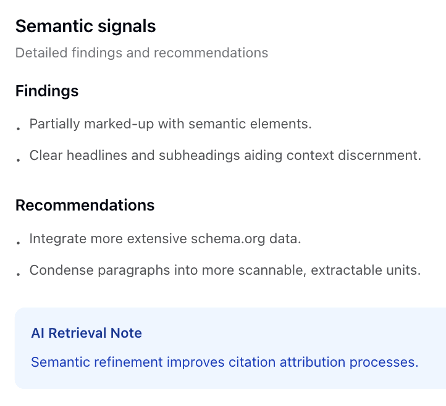

The language throughout the report can feel unnatural. Phrases like “clear headings and sub-headings aiding context discernment” simply mean “good use of headings to make content easy to understand.” This AI-generated verbosity occasionally obscures rather than clarifies.

Practical Considerations

The tool is included free with SitecoreAI, making it accessible for existing Sitecore customers. However, I encountered several usability issues: export and copy functionality that didn’t work, a buggy interface that prevented full-window viewing, and inconsistent behaviour including repeated ‘Failed to complete tasks’ messages despite tasks appearing complete. The interface and functionality also changed during my testing period, suggesting active development but also instability.

SEMrush AI Visibility Toolkit: The Measurement Approach

SEMrush’s AI Visibility Toolkit takes a different tack. According to SEMrush, it “shows you how brands appear in AI-generated answers, helping you measure a new layer of visibility beyond traditional search. This toolkit is designed for SEOs and marketers who want to monitor how their site and competitors are positioned across AI systems.”

What It Does

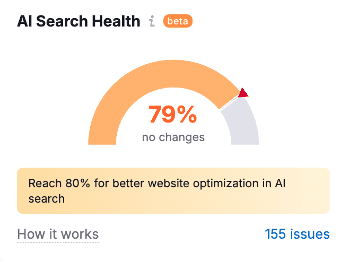

SEMrush provides two primary metrics: an AI Visibility score reflecting actual presence in AI-generated search results, and an AI Search Health score based on technical checks including whether AI search bots are blocked.
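As a rough illustration of the “are AI search bots blocked?” check (not SEMrush’s implementation), the sketch below uses Python’s standard `urllib.robotparser` to test whether a robots.txt file allows a handful of AI crawler user-agent tokens. The token list, the helper name `ai_crawler_access`, and the sample robots.txt are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens published by some AI providers. This list is an
# assumption for illustration; it is not necessarily what SEMrush checks.
AI_CRAWLERS = ["GPTBot", "ClaudeBot", "PerplexityBot", "Google-Extended", "CCBot"]


def ai_crawler_access(robots_txt: str, url: str) -> dict[str, bool]:
    """Map each AI crawler token to whether robots.txt lets it fetch url."""
    parser = RobotFileParser()
    # Parse robots.txt text directly, so the example runs offline.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in AI_CRAWLERS}


if __name__ == "__main__":
    # Invented sample: block OpenAI's GPTBot, allow everyone else.
    sample = (
        "User-agent: GPTBot\n"
        "Disallow: /\n"
        "\n"
        "User-agent: *\n"
        "Allow: /\n"
    )
    for agent, allowed in ai_crawler_access(sample, "https://codehousegroup.com/").items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

In this sample only GPTBot is reported as blocked. Against a live site you could call `set_url()` and `read()` on the parser instead of passing text to `parse()`.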

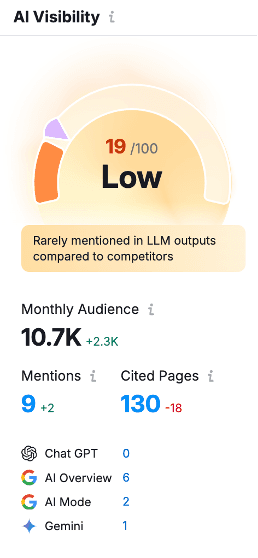

For codehousegroup.com, SEMrush returned an AI Visibility score of 19/100. The AI Search Health score was 79%, much closer to Sitecore’s readiness score of 75.

What I Found

SEMrush provides tangible data points that Sitecore lacks: number of mentions, number of cited pages, and a breakdown by LLM platform. These are concrete metrics you can track over time.

I can’t independently verify the accuracy of this reporting, but the specificity feels more grounded than audit-based predictions.

More Specific Recommendations

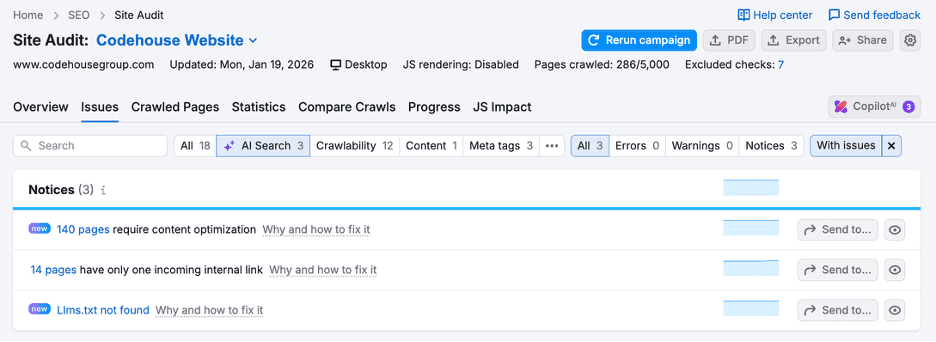

When it comes to improvement actions, SEMrush provides more limited but more specific guidance. For codehousegroup.com, it identified 140 pages requiring content optimisation, 14 pages with only one incoming link, and the absence of an llms.txt file.

Crucially, the content optimisation recommendations detail the specific issue for each page: poor heading hierarchy, low readability, insufficient readable content, or excessive content complexity. This specificity enables prioritisation and action.

A Note on llms.txt

SEMrush flags the absence of an llms.txt file as an issue. It’s worth noting that llms.txt is an experimental format with very limited real-world adoption and minimal crawler support. There is currently no public commitment from major LLM providers - OpenAI, Google, Anthropic, or Microsoft - to respect or systematically fetch llms.txt files for crawling or indexing. Implementing it won’t hurt, but its impact is currently theoretical.
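For context, llms.txt is a proposal (published at llmstxt.org) for a Markdown file served from a site’s root: an H1 with the site name, a blockquote summary, then H2 sections listing key links. If you do decide to implement it, a minimal generator might look like the sketch below. The helper name `build_llms_txt` and the example URLs are hypothetical, and the structure follows the public proposal rather than any LLM provider’s stated requirement.

```python
def build_llms_txt(site_name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Assemble llms.txt content: an H1 title, a blockquote summary,
    then one H2 section per key, each holding a Markdown list of links.

    Hypothetical helper for illustration only.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for title, url, note in links:
            # Each entry is "- [title](url)", optionally followed by ": note".
            lines.append(f"- [{title}]({url})" + (f": {note}" if note else ""))
        lines.append("")  # blank line between sections
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical pages, for illustration only.
    print(build_llms_txt(
        "Codehouse",
        "Digital experience agency.",
        {"Key pages": [
            ("Services", "https://codehousegroup.com/services", "What we offer"),
            ("Contact", "https://codehousegroup.com/contact", ""),
        ]},
    ))
```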

Practical Considerations

SEMrush’s AI Visibility Toolkit requires a paid subscription. The toolkit is comprehensive, offering additional capabilities around prompt research and competitor analysis, though navigating across various sub-tools requires effort to assemble a complete picture. Features like AI Search Health are labelled ‘beta’, acknowledging the evolving nature of this space.

The Gap That Matters: 75 vs. 19

The most revealing finding from this comparison isn’t any individual feature - it’s the disconnect between the two scores. Sitecore rates codehousegroup.com’s citation readiness at 75/100. SEMrush rates its actual AI visibility at 19/100.

This 56-point gap suggests several possibilities:

Our optimisation techniques are imprecise. The factors we believe influence LLM citations may not correlate strongly with actual citation behaviour. We’re optimising for proxies, not outcomes.

Prompt alignment matters more than site quality. Visibility may depend less on your site’s technical readiness and more on whether your content aligns with the high-volume prompts people actually ask LLMs.

The metrics themselves are immature. Both tools are essentially inventing their own measurement frameworks. There’s no industry consensus on what ‘good’ looks like, how to weight different factors, or even what should be measured.

This feels a lot like SEO in its early days, when practitioners chased PageRank and keyword density without fully understanding Google's algorithms. The risk is the same: we optimise for metrics rather than outcomes, creating content to attract citations rather than to serve the audience we already have.

Practical Recommendations

Given the current state of these tools, here’s how I’m approaching AEO measurement with clients:

Use multiple tools for triangulation. Neither tool alone gives a complete picture. I combine Sitecore (for audit-based readiness), SEMrush (for actual visibility tracking), and Screaming Frog (for technical SEO fundamentals) to establish baselines and track progress.

Prioritise SEMrush for benchmarking over time. Its focus on actual mentions provides a more concrete baseline. The number of mentions and cited pages are metrics that directly reflect real-world outcomes.

Use Sitecore for directional guidance, not precise measurement. Given the near-identical scores across very different sites, I have low confidence in moving these specific metric needles. However, the recommendations can prompt useful thinking about content structure and schema markup.

Focus on fundamentals. Clear content structure, accurate information, good readability, and comprehensive coverage of topics your audience cares about - these matter for both traditional SEO and AEO, regardless of how imprecise our measurement tools are.

Don’t over-invest in unproven standards. Implementing llms.txt is low-effort, but don’t expect it to move the needle until major LLM providers commit to supporting it.

Where This Is Going

Both tools are clearly works in progress. SEMrush has beta features and Sitecore’s agent changed during my testing period. This reflects the reality that AEO as a discipline is still finding its footing.

The questions worth asking over the next twelve months: Will the gap between ‘readiness’ and ‘visibility’ narrow as our understanding improves? Will industry-standard metrics emerge? Will major LLM providers offer their own visibility data, the way Google eventually provided Search Console?

For now, tools like these represent our best available options for bringing data to what has been a data-poor area of digital marketing. Use them together, interpret their outputs critically, and remember that the fundamentals of good content have always mattered more than gaming any specific algorithm - AI or otherwise.

Let’s Continue the Conversation

AI is reshaping how audiences discover and engage with brands - and the playbook is still being written. If you’re grappling with how to optimise your digital experience for the age of AI, we’d love to hear from you.

At Codehouse, we’re actively exploring these questions with our clients: How do you measure what matters? Where should you invest your optimisation efforts? How do you future-proof your digital presence as LLMs become a primary discovery channel?

Get in touch via hello@codehousegroup.com to continue this important and timely discussion. Whether you’re looking to benchmark your current AI visibility, develop an AEO strategy, or simply make sense of the rapidly evolving landscape, we’re here to help.